How do I use zamba?

User-friendly web application

Zamba Cloud is a web application where you can use the zamba algorithms simply by uploading videos or pointing to where they are stored. It is built for conservation researchers and wildlife experts who aren’t familiar with programming interfaces. If you’d like all of the functionality of zamba without any of the code, this is for you!

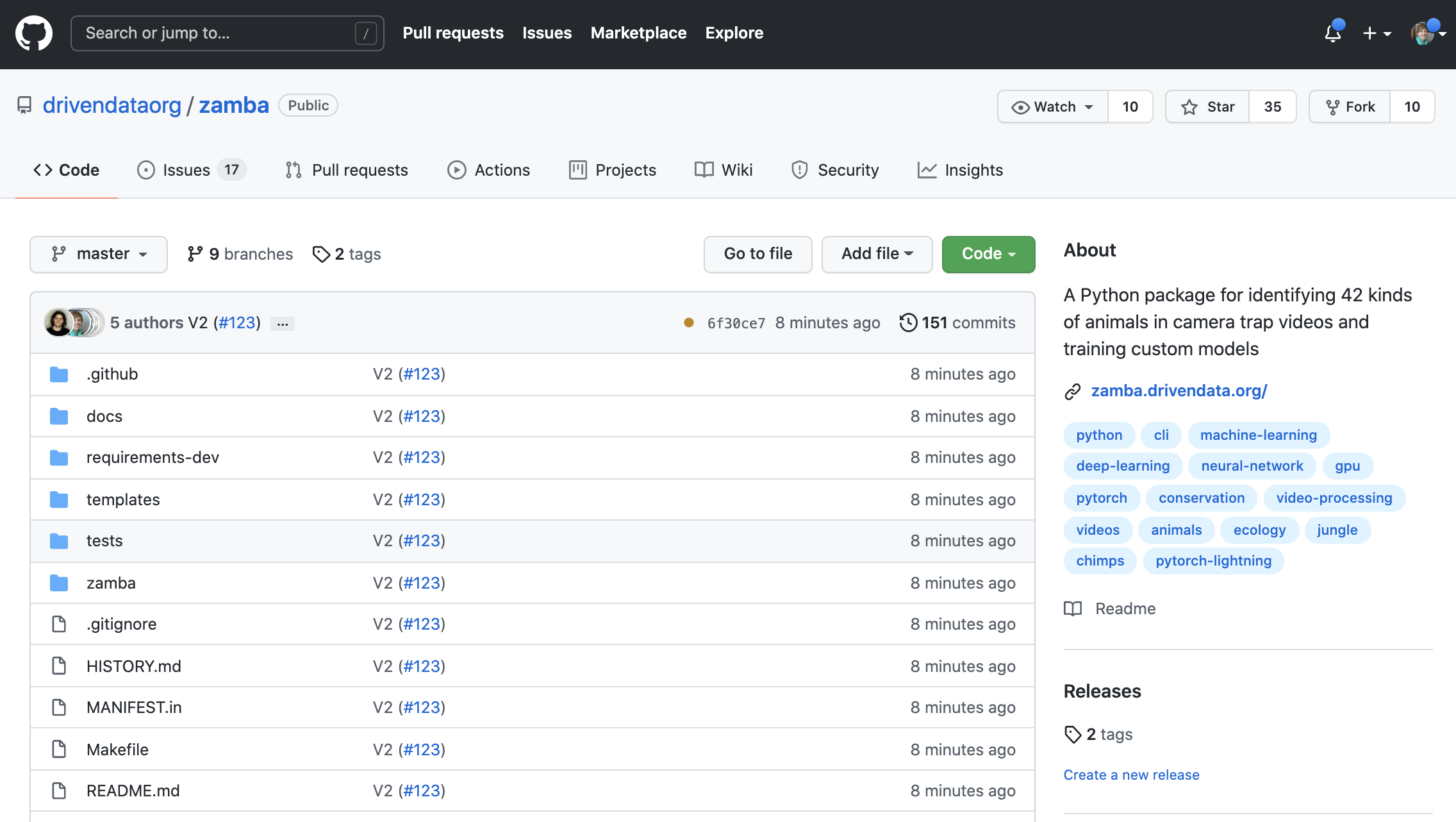

Explore Zamba Cloud →

Open-source Python package

Zamba is also provided as an open-source package that can be run from the command line or imported as a Python library. If you’d like to interact with zamba through a programming interface, hack away! Visit the zamba package documentation for details and user tutorials.

Explore the Zamba package →