What can zamba do?

Classify unlabeled videos

Zamba includes multiple state-of-the-art, pretrained machine learning models for species and blank detection in different geographies.

| Pretrained model | Recommended use | Training data |
|---|---|---|
| African species identification | Species classification in jungle ecologies | ~250,000 camera trap videos from Central, West, and East Africa |
| European species identification | Species classification in non-jungle ecologies | The African species identification model is finetuned with ~13,000 additional videos from camera traps in Germany |
| Blank vs. non-blank | Classifying videos as either blank or containing an animal, without species identification | All data from both African and European models (~263,000 videos) |

The African species identification model can detect 32 species.

The European species identification model can detect 11 species.

Example use cases:

- You want to identify which camera trap videos contain a given species, and your species of interest are detected by one of the pretrained models.

- You want to identify which of your videos are blank and which are not. The species in your videos do not need to be exactly the same as those in a pretrained model, but the context should be relatively similar. For example, underwater footage is likely out of the depth (literally and metaphorically) of the blank vs. non-blank model.
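As a rough illustration of the first use case, here is a minimal sketch of how you might pull out the videos that contain a species of interest once predictions exist. It assumes the predictions are saved as a CSV with a `filepath` column and one probability column per species. The column names, file names, and the 0.5 threshold are all hypothetical, so adjust them to match your actual output.

```python
import csv
import io


def videos_with_species(predictions_csv, species, threshold=0.5):
    """Return file paths whose predicted probability for `species` meets the threshold.

    `predictions_csv` is any open text file object holding the predictions table.
    """
    reader = csv.DictReader(predictions_csv)
    if reader.fieldnames is None or species not in reader.fieldnames:
        raise KeyError(f"no column named {species!r} in the predictions table")
    return [row["filepath"] for row in reader if float(row[species]) >= threshold]


# Hypothetical predictions table (assumed layout, not real model output).
SAMPLE = """filepath,blank,elephant,leopard
vid_001.mp4,0.05,0.90,0.02
vid_002.mp4,0.97,0.01,0.01
vid_003.mp4,0.10,0.15,0.70
"""

elephant_videos = videos_with_species(io.StringIO(SAMPLE), "elephant")
print(elephant_videos)
```

The same helper covers the blank vs. non-blank case: pass `"blank"` as the column name and pick a threshold that suits how cautious you want to be about discarding footage.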

Train a custom model

Zamba can be used to train custom models on new species and geographies based on user-provided labeled data.

Example use cases:

- You want to identify species not detected by any of the pretrained models, and you have labeled videos of those additional species.

- You have additional labeled videos of species detected by one of the pretrained models, but would like a custom model that performs better in your specific context.

How this works

The retraining process starts with one of the official models (see table above). The model then continues training on any new data provided, including predicting completely new species — the world is your oyster! (We'd love to see a model trained to detect oysters.) The custom model can then be used to generate predictions on new, unlabeled videos.
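Before retraining, it can help to check which of your labels are new and how many examples each one has. The sketch below is one way to do that, assuming the labeled data is described by a CSV with `filepath` and `label` columns. That layout, the file names, and the short `PRETRAINED` list are hypothetical stand-ins, not the real species list of any official model.

```python
import csv
import io
from collections import Counter


def summarize_labels(labels_csv, pretrained_species):
    """Count videos per label and list labels absent from `pretrained_species`."""
    counts = Counter(
        row["label"].strip().lower() for row in csv.DictReader(labels_csv)
    )
    known = {name.lower() for name in pretrained_species}
    new_labels = sorted(label for label in counts if label not in known)
    return counts, new_labels


# Hypothetical labels file (assumed layout).
LABELS = """filepath,label
clip_01.mp4,elephant
clip_02.mp4,oyster
clip_03.mp4,oyster
clip_04.mp4,leopard
"""

# Hypothetical subset of species known to a pretrained model.
PRETRAINED = ["elephant", "leopard", "blank"]

counts, new_species = summarize_labels(io.StringIO(LABELS), PRETRAINED)
print(dict(counts))
print(new_species)
```

Labels with very few videos are worth spotting early, since a custom model has little to learn from them.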

How do I use zamba?

User-friendly web application

Zamba Cloud is a web application where you can use the zamba algorithms by just uploading videos or pointing to where they are stored. Zamba Cloud is created for conservation researchers and wildlife experts who aren’t familiar with using a programming interface. If you’d like all of the functionality of zamba without any of the code, this is for you!

Explore Zamba Cloud →

Open-source Python package

Zamba is also provided as an open-source package that can be run from the command line or imported as a Python library. If you’d like to interact with zamba through a programming interface, hack away! Visit the zamba package documentation for details and user tutorials.
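For a feel of the command-line route, here is a hedged sketch that assembles a zamba command from Python and runs it only if the `zamba` executable is installed. The `predict` and `train` subcommands and the `--data-dir` and `--labels` flags are written as an assumption about the package's interface, so check them against the package documentation before relying on them. The helper names and the `example_vids/` path are hypothetical.

```python
import shutil
import subprocess


def build_zamba_command(action, data_dir, labels=None):
    """Assemble an argument list for a zamba CLI call (flag names are assumptions)."""
    if action not in {"predict", "train"}:
        raise ValueError(f"unsupported action: {action!r}")
    cmd = ["zamba", action, "--data-dir", str(data_dir)]
    if action == "train":
        if labels is None:
            raise ValueError("training needs a labels file")
        cmd += ["--labels", str(labels)]
    return cmd


def run_if_installed(cmd):
    """Run the command and return its exit code, or None if the executable is missing."""
    if shutil.which(cmd[0]) is None:
        return None
    return subprocess.run(cmd, check=False).returncode


# Build (but do not run) a prediction command for a hypothetical folder of videos.
predict_cmd = build_zamba_command("predict", "example_vids/")
print(" ".join(predict_cmd))
```

Passing the arguments as a list rather than a single shell string avoids quoting problems when folder names contain spaces.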

Explore the Zamba package →

As part of the Pan African Programme: The Cultured Chimpanzee, over 8,000 hours of camera trap footage have been collected across chimpanzee habitats in 15 African countries. Labeling the species in this footage is no small task. It takes a lot of time to determine whether any animals are present in the footage and, if so, which ones.

To date, thousands of citizen scientists have manually labeled video data through the Chimp&See Zooniverse project. In partnership with experts at The Max Planck Institute for Evolutionary Anthropology (MPI-EVA), this effort fed into a well-labeled dataset of nearly 2,000 hours of camera trap footage from Chimp&See's database.

Using this dataset, DrivenData and MPI-EVA ran a machine learning challenge where hundreds of data scientists competed to build the best algorithms for automated species detection. The three submissions that best predicted the presence and type of wildlife in new videos won the challenge and received €20,000 in prizes. The winning techniques from this challenge provided a starting point for the algorithms behind Project Zamba.

Zamba means forest in Lingala. Lingala is one of many Bantu languages of central Africa, and is spoken throughout the Democratic Republic of the Congo and the Republic of the Congo. The first ever Homo sapiens emerged in African forests and savannas, and African zambas may hold the keys to unlocking critical mysteries of human evolution.

Contribute to the project repository

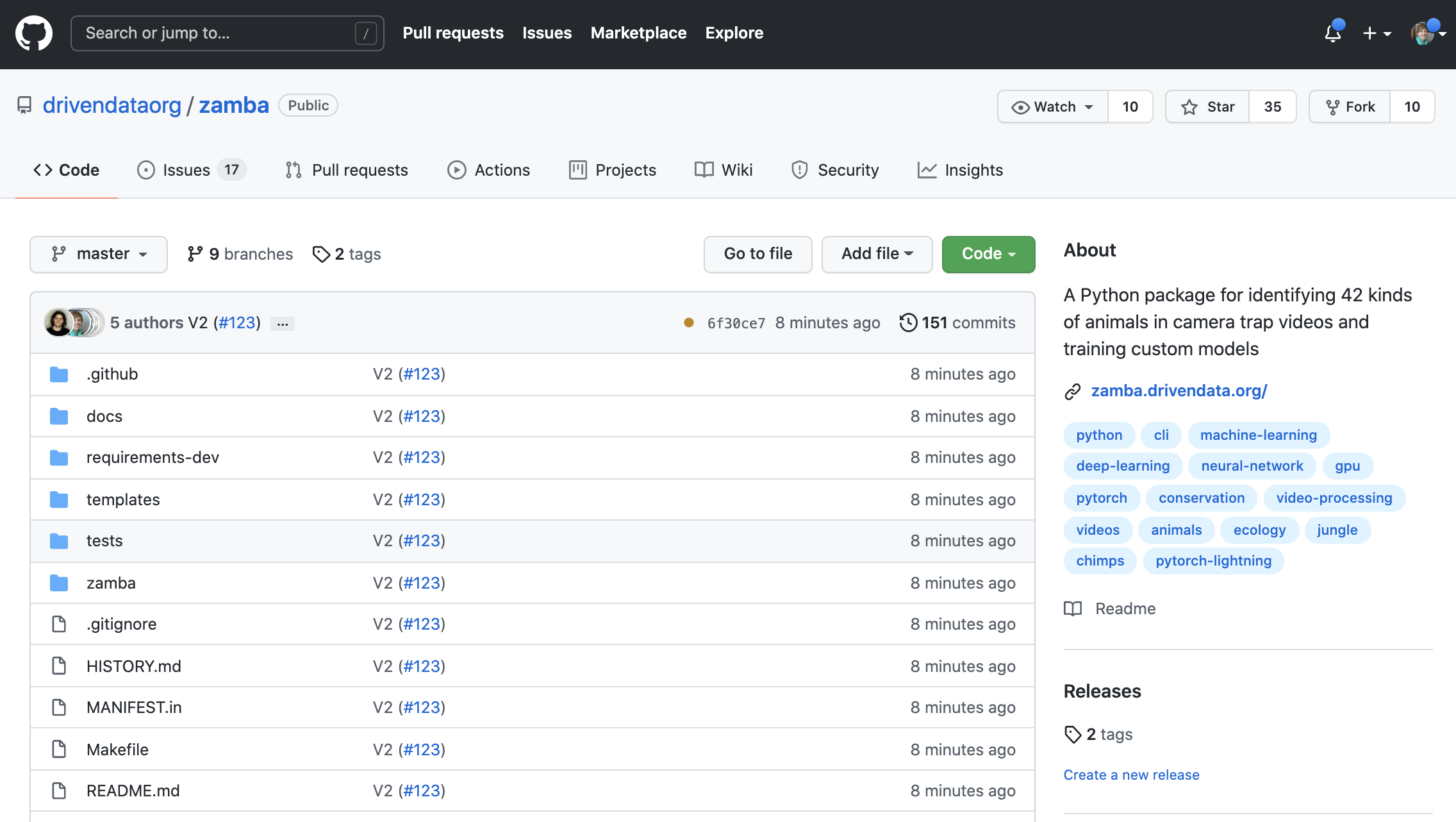

The code developed for Project Zamba is openly available for anyone to learn from and use. You can find the latest version of the project codebase on GitHub.

Thanks to all the participants in the Pri-Matrix Factorization Challenge! Special thanks to Dmytro Poplavskiy (@dmytro), developer of the top-performing solution adapted for Project Zamba; to the project team at the Max Planck Institute for Evolutionary Anthropology for organizing the competitions and providing the data; and to the ARCUS Foundation for generously funding this project.